It’s becoming one of the defining questions of our time: Human Hemingway, or ChatGPT Chekhov?

The latest webinar from the sales pros at 30 Minutes to President’s Club was a contest that pitted human authors against artificial intelligence to determine who writes the best cold emails. Using generative AI is now as easy as sending a text message, but questions abound about the quality of its output.

Will you, as a sales rep, see a positive ROI from switching your workflow to ChatGPT to help you write emails, or will you be wasting time fiddling around with the latest tech fad?

As always, we learned a lot from the 30MPC team and their guests. During the webinar, polls captured audience sentiment after each round to identify the most popular message. But we were left wondering: objectively, which method produced the best email? Which author was more likely to get a reply?

One unbiased way to pick a winner would be to send the emails out and compare the number of replies. But besides taking too long, that approach would burn through our whole list of recipients before we got an answer. Ideally, we’d get objective feedback before hitting send.

And fortunately, Boomerang has just the tool to do it!

Meet Respondable, the AI email assistant

Respondable is Boomerang’s real-time, AI-powered email assistant. Based on data from hundreds of millions of real emails, it uses machine learning algorithms to evaluate your draft as you type.

Respondable provides feedback on the seven parameters of email content that, according to the data, have the most impact on how likely your recipient is to reply. For each metric, Boomerang displays both the optimal level and how your draft-in-progress compares.

Four scores measure objective criteria:

- email subject length

- word count

- question count

- overall reading level

There are also three indicators for the tone of your content:

- positivity

- politeness

- subjectivity

Watch the scores change as edits are made to this draft:

By keeping an eye on Respondable’s feedback, you can fix any issues with your email before you fire away. In doing so, you’ll increase your chances of receiving a reply.

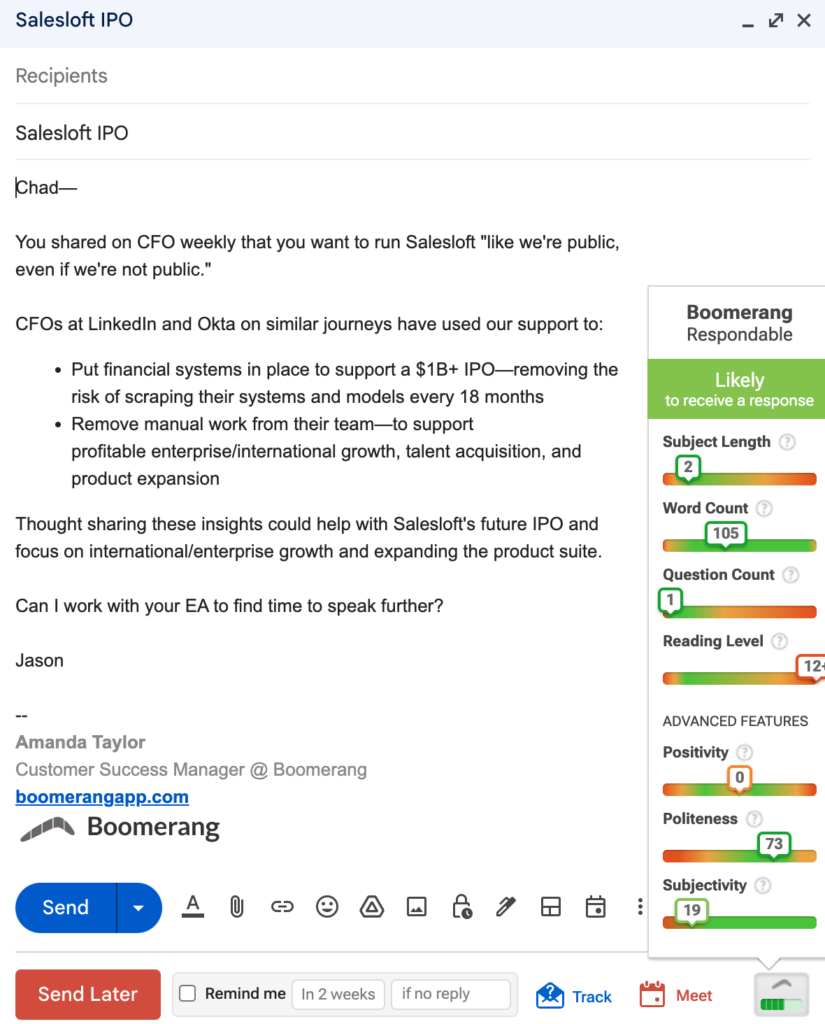

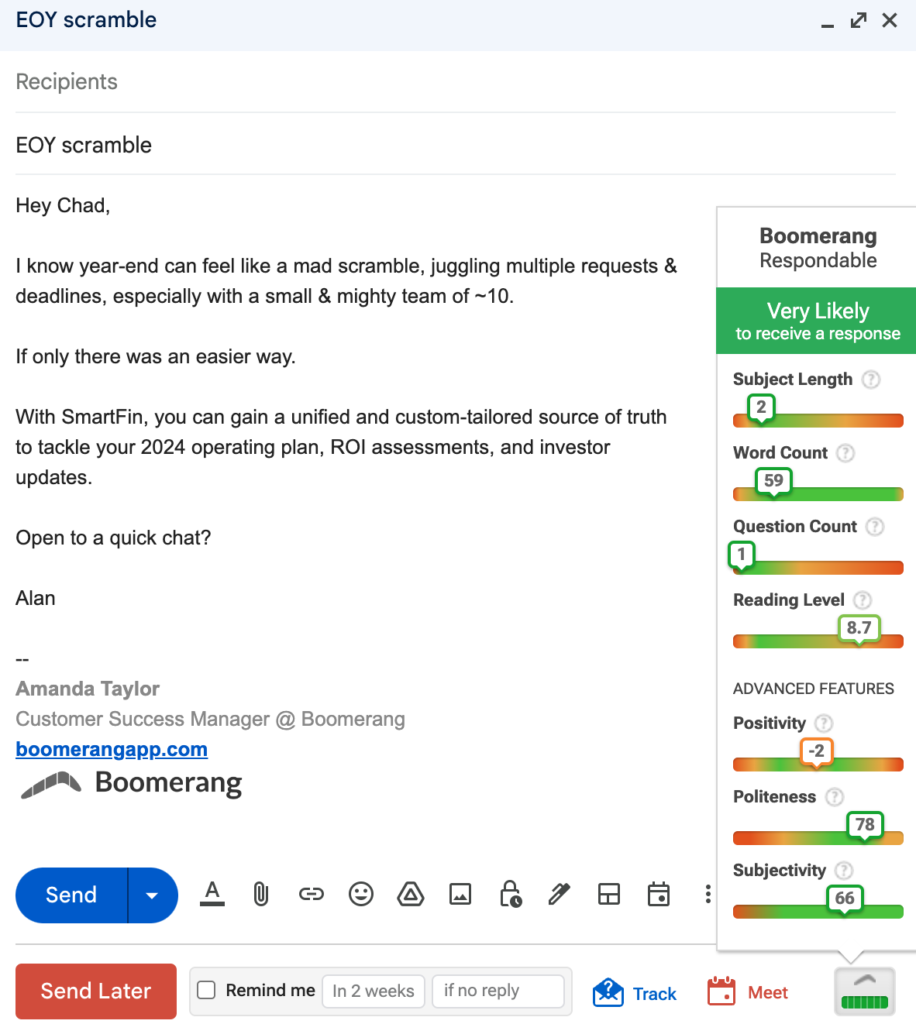

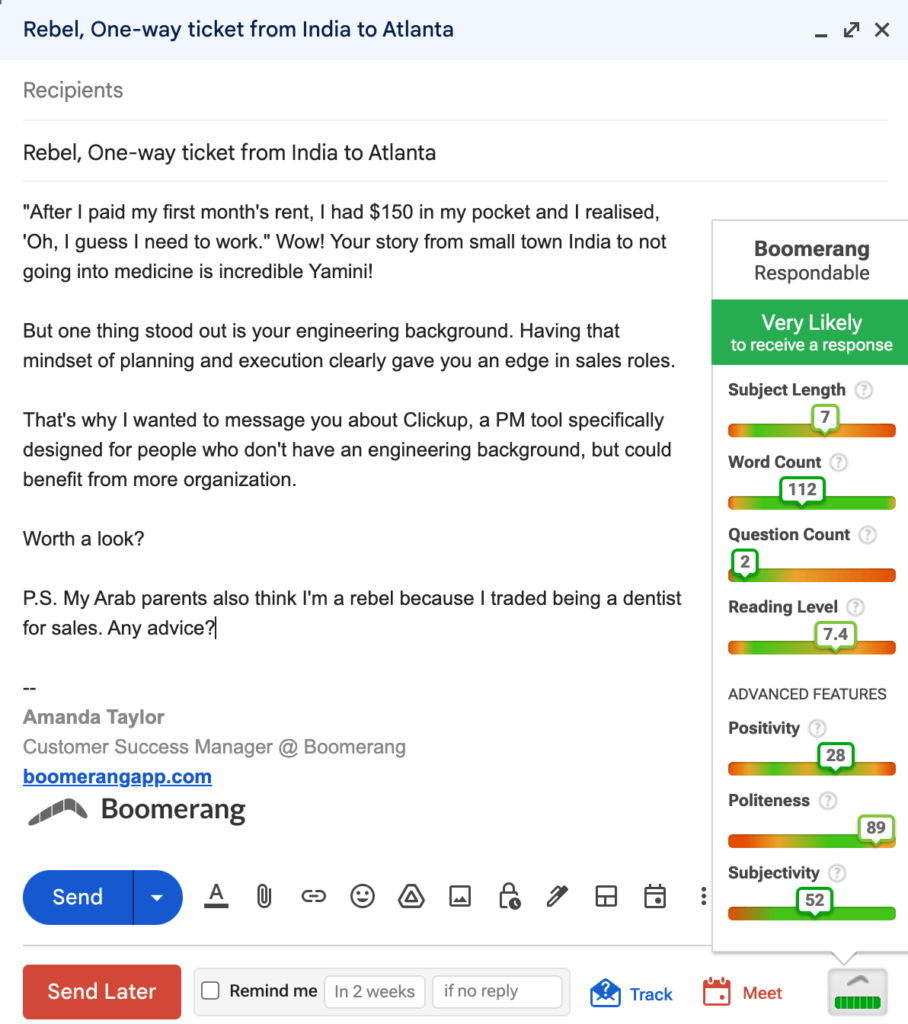

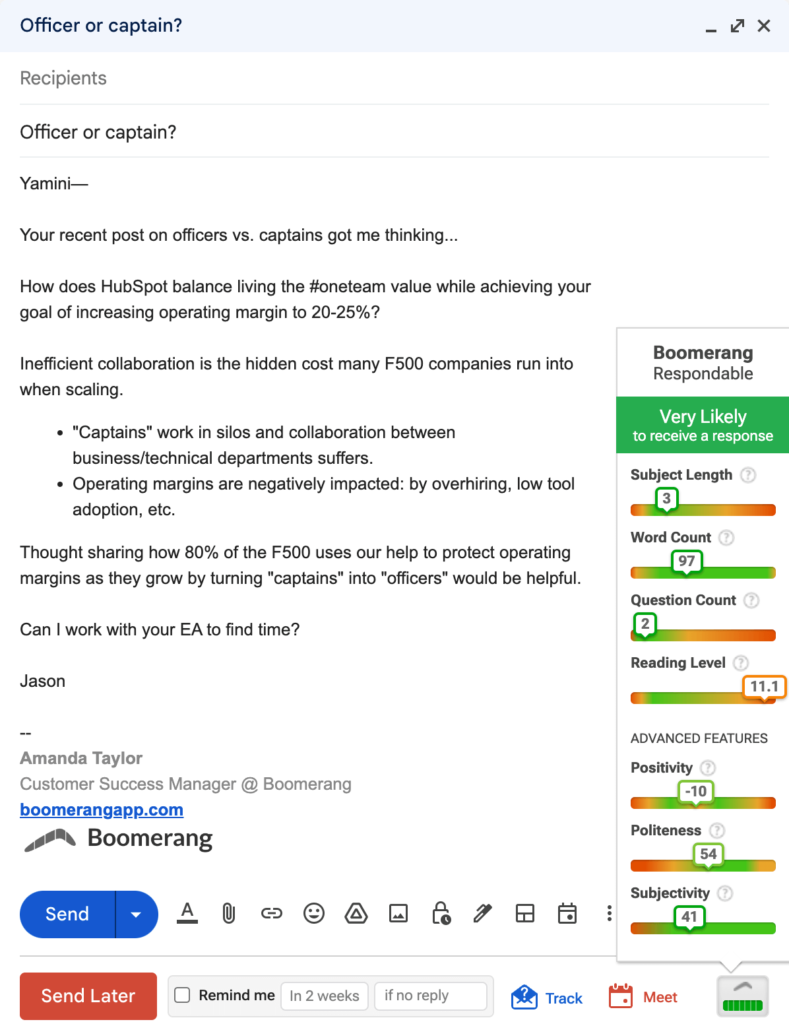

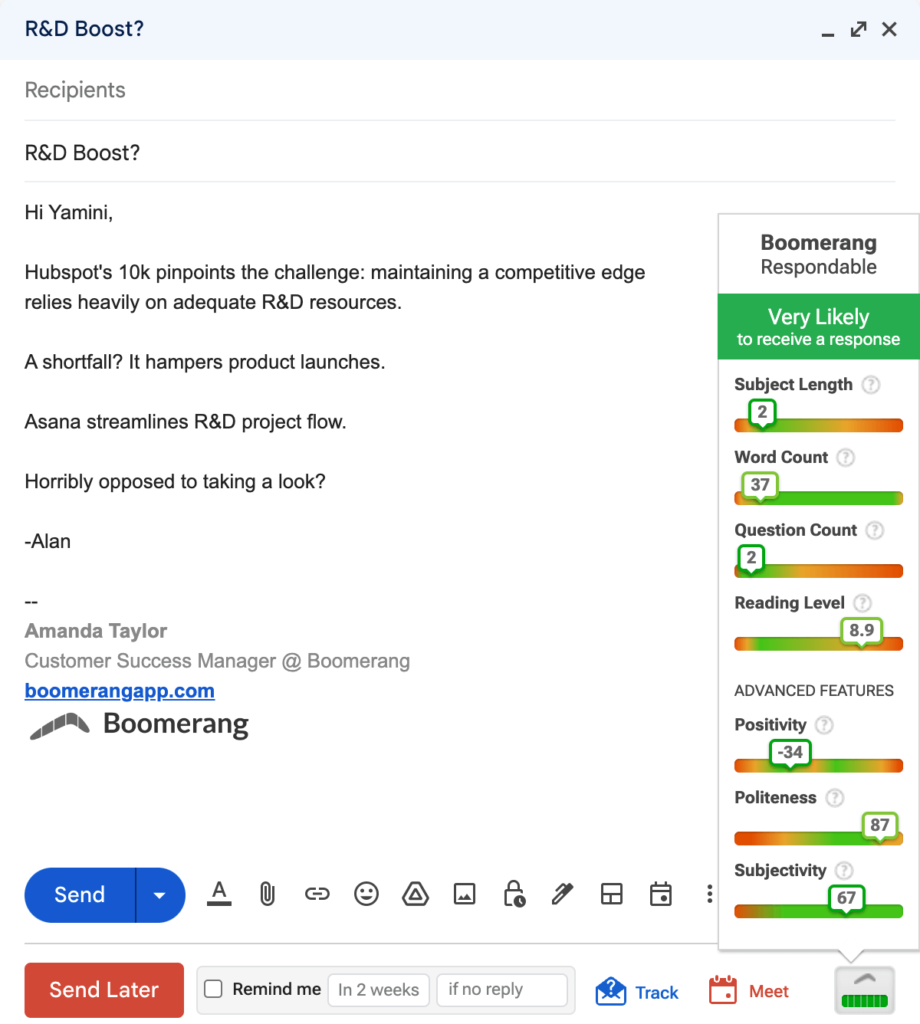

How the human-written and AI-generated email drafts scored

To evaluate the human- and AI-written messages from 30MPC’s webinar, we pasted each email into a new Gmail draft, checked Respondable’s feedback, and captured a screenshot of each one.

Here’s how the cold email drafts performed…

Human vs. ChatGPT, round 1: Pitching an energy drink

Belal crafted his message on his own. Despite its less traditional structure, his draft passed Respondable’s checks with flying colors.

Alan prompted AI to generate his message, and it looks quite successful as well. One thing to keep an eye on, though: the Reading Level is creeping toward an undesirable range.

Human vs. ChatGPT, round 2: Approaching an executive about accounting software

Jason’s bullets made this message to an executive easy to digest, but Respondable rated its Reading Level at Grade 12+. That’s far above the optimal third-grade level, which would have been more likely to get a response.

Alan, with ChatGPT’s help, came out on top in this head-to-head. (Or is it ChatGPT with Alan’s help…?)

Human vs. ChatGPT, round 3: Collaboration software for a billion-dollar business

Finally, the round robin: Jason vs. Belal vs. Alan/ChatGPT. It’s no surprise that all three messages got top marks despite three different approaches; these guys are sales experts.

So, who won, and more importantly, what did we learn?

Human or AI: which was best?

The moment you’ve all been waiting for: who was the Sales Shakespeare?! It was super close, but because Respondable’s machine learning gave every draft from the AI writer top marks, we have to hand the trophy to ChatGPT, with a well-deserved honorable mention for Alan’s razor-sharp prompts.

Three tips for getting more replies and improving your email productivity

Reading Level is a blind spot that’s easy to overlook if you aren’t getting feedback in your email composer.

Jason (human, just to be clear) got a demerit from Respondable for a draft that clocked in at college-level prose. But one of Alan’s ChatGPT-assisted drafts was also approaching the danger zone, above Grade 9. If you’re using AI to write an email, try including instructions about the reading level in your prompt.

Why reading level is key to getting email replies, and how to improve it »

No more than two questions in a cold sales outreach email!

This is second nature to Jason, Belal, and Alan, which is why there weren’t any red flags in Respondable’s scores above. But this one is essential, so we’re calling it out on its own.

In general, our AI algorithms have found that an email doesn’t become less likely to receive a response until you stuff four or more questions into the body. Sales is a different story: less is more when you’re contacting someone new.

Learn more about why it’s important to ask a question, but not too many »

Even the most experienced sales pros can benefit from an editing assistant.

Whether you’re cranking out pitches at the speed of light with the help of AI or hand-crafting your drafts the “old-fashioned way,” it helps to have a sidekick.

Respondable can be your extra set of artificial intelligence eyes (or AI-yes 🙂) to increase your chances of getting a response. It gives you objective feedback before you click send.

Want to learn more about improving the chances you’ll get a reply to your emails? You’ll love 7 Tips for Getting More Responses to Your Emails (With Data!).

Many thanks to Alan Shen and the whiz kids at 30MPC, and to guest hosts Vin Matano, Jason Bay, and Belal Batrawy, for an awesome webinar.

Install Boomerang’s AI email assistant

Last but not least, Respondable is free to use once you install Boomerang for Gmail or Outlook.