Desktop search has come a long way in the past few years. In this post, we’ll explore how the technology behind all of the major desktop search options has been reshaped by innovations from web search. In follow-up posts, we’ll talk a little bit about how desktop search differs from web search and how it has both succeeded and failed at making our computers easier to work with. We’ll share a few tricks for getting more out of desktop search and a few things we wish it could do. We’ll also share a little bit about how Baydin plans to fill in the gaps.

There are two major advantages to a modern desktop search experience: first, searching for a document is a lot faster than it used to be, and second, the text inside virtually every type of file is searchable, not just the filename.

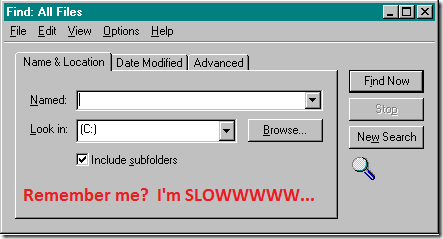

Think back to the file search in Windows 95. It was pretty terrible. All it could do was search for filenames, and it took the better part of eternity to find anything. Here’s why: when someone searched for a word, Windows opened the file system and looked at every single file it had. It compared the search query with the filename for each file, and as it found matches, it added the files to the results listing. Every time a new search started, Windows had to look at every single file, which is why the results trickled in over a period of a few minutes. If the search term were somewhere in a document or in an email rather than in the filename of a physical file, we were pretty much out of luck.
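To make the cost concrete, here’s a rough Python sketch of that Windows 95-style search. It is an illustration under stated assumptions, not Windows’ actual code. The function name `scan_filenames` is hypothetical, and the case-insensitive substring match is our guess at reasonable matching behavior.

```python
import os
from typing import Iterator


def scan_filenames(root: str, query: str) -> Iterator[str]:
    """Walk every file under `root` and yield paths whose filename contains `query`.

    Hypothetical sketch, not Windows' implementation. Nothing is remembered
    between searches, so every call repeats the full walk of the disk.
    """
    needle = query.lower()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Only the filename is compared; the file's contents are never opened.
            if needle in name.lower():
                # Yield each match as soon as it is found, so results "trickle in".
                yield os.path.join(dirpath, name)
```

Nothing is saved between calls. A second search for a different word walks the entire disk all over again.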

Searching the full text of documents was beyond the pale. To do that, Windows would have needed to open each file as it came across it and extract the text. That would have been slower than slow, it would have required every piece of software that saved any kind of document to provide hooks for Windows to extract the text, and it probably would have made the computer rottenly unstable.

Searching through email in Outlook (up until Office 2003) used the same method, but since every email had a known structure, Outlook could search through the full text of messages. When a user started a search, Outlook opened the most recent email and compared the search terms against each word in it. If there was a match, it added the email to the result list right away. When it finished with the most recent email, it moved on to the next, then to the next, then to the next. Searching through email was a slow process, but it would eventually turn up messages even when the search terms appeared only in their body text.
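The Outlook-style approach can be sketched the same way. This is a minimal illustration, not Outlook’s implementation. The `Email` class and `scan_emails` function are hypothetical, and splitting text on whitespace stands in for real word tokenization.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Iterator


@dataclass
class Email:
    # Hypothetical minimal message structure; real mail stores hold many more fields.
    received: datetime
    subject: str
    body: str


def scan_emails(messages: Iterable[Email], terms: list[str]) -> Iterator[Email]:
    """Check messages one at a time, newest first, yielding each match immediately."""
    wanted = [t.lower() for t in terms]
    # Sorting stands in for a mail store that already keeps messages in date order.
    for msg in sorted(messages, key=lambda m: m.received, reverse=True):
        # The known structure of an email means its text can be compared
        # word by word (here: crude whitespace splitting).
        words = set((msg.subject + " " + msg.body).lower().split())
        if all(t in words for t in wanted):
            yield msg
```

Because matches are yielded one at a time, a caller can display each one as soon as it is found, much like the result list filling in while Outlook worked. Every message is still examined on every search.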

A real innovation happened, though, when software developers realized that the same technology that powers web search engines could be applied to the desktop.

When someone clicks the search button on a web search engine, the search engine responds in a totally different way from Windows 95-style search. Google does not crawl every page on the web, word for word, comparing the search terms for a match. Instead, Google just looks in a previously generated database where they have already prepared a list of all the web pages that contain the search term (and a bunch of other information that helps them order the results!).

Instead of sifting through every word ever written on the Internet in real time, Google crawls each page on the web only every few hours, days, or weeks depending on how important a site is and how frequently its content changes. When Google crawls a site, its crawler looks through every page, processes every term, and updates the database.
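To make “updates the database” concrete, here’s a minimal sketch of refreshing one page’s entries in a term-to-pages mapping when that page is re-crawled. It assumes the database is a simple in-memory dictionary and that an update just replaces the page’s old terms with its new ones. Google’s actual pipeline is far more elaborate and isn’t described in this post. `CrawledIndex` and `recrawl` are hypothetical names.

```python
from collections import defaultdict


def tokenize(text: str) -> set[str]:
    # Deliberately crude: lowercase and split on whitespace.
    return set(text.lower().split())


class CrawledIndex:
    """Hypothetical toy term -> pages mapping, refreshed one page at a time."""

    def __init__(self) -> None:
        self.postings: dict[str, set[str]] = defaultdict(set)  # term -> URLs
        self.page_terms: dict[str, set[str]] = {}  # URL -> terms seen last crawl

    def recrawl(self, url: str, text: str) -> None:
        """Replace whatever the index held for `url` with the page's current terms."""
        # Remove postings left over from the previous crawl of this page.
        for term in self.page_terms.get(url, set()):
            self.postings[term].discard(url)
            if not self.postings[term]:
                del self.postings[term]
        # Record the terms the page contains now.
        terms = tokenize(text)
        self.page_terms[url] = terms
        for term in terms:
            self.postings[term].add(url)
```

Only the re-crawled page is touched. The rest of the index stays as it was, which is what lets a crawler revisit important pages often and quiet ones rarely.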

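Very crudely, that index is one big table: each term is listed once, followed by every page that contains it. The entry for “chicken,” for example, lists every page the crawler has seen that mentions chicken.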

All Google has to do when you search for “chicken” is find that entry in the index and list the results.
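A toy version of this kind of index (usually called an *inverted index*) fits in a few lines of Python. The URLs and page text are made up, `build_index` and `search` are hypothetical names, and splitting on whitespace is a deliberately crude stand-in for real text processing.

```python
from collections import defaultdict


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every term to the set of page URLs that contain it (done ahead of time)."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for term in text.lower().split():
            index[term].add(url)
    return dict(index)


def search(index: dict[str, set[str]], term: str) -> list[str]:
    # The expensive work already happened in build_index; a query is one lookup.
    return sorted(index.get(term.lower(), set()))


# Made-up example pages.
pages = {
    "http://example.com/recipes": "roast chicken with lemon",
    "http://example.com/farm": "chicken and egg prices",
    "http://example.com/cars": "used car listings",
}
index = build_index(pages)
print(search(index, "chicken"))
# ['http://example.com/farm', 'http://example.com/recipes']
```

The query never looks at page text. It only reads the list that `build_index` prepared earlier.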

Of course, that’s a sweeping simplification: it doesn’t address multiple-term searches, result order, or the fact that the index is HUGE and difficult to maintain. There are dozens of fantastic papers from Google engineers that explain a lot of the details; try http://labs.google.com/papers for a listing, or start with the overview Sergey and Larry wrote while they were still at Stanford. But for the purposes of this post, that’s all we need to worry about.

Creating and maintaining a mapping from search terms to web pages is the critical innovation that desktop search borrowed. The idea extends quite well to our individual computers. Instead of a mapping from terms to web pages, though, we need a mapping from terms to documents. The problem is a little bit harder in that we have to be able to index a whale of a lot of document types instead of just HTML, but it is a lot easier in that the index is nowhere near as large as the index for the web. It can be generated relatively quickly (probably in under an hour on the average computer) and does not require a lot of space.
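The “many document types” problem usually comes down to a separate text extractor per format. Here’s a minimal sketch that assumes only two formats, plain text and HTML, both handled with Python’s standard library. Real desktop indexers plug in extractors for word-processor files, PDFs, email stores and more. The registry name `EXTRACTORS` and the helper functions are hypothetical.

```python
import os
from html.parser import HTMLParser
from typing import Callable, Optional


class _TextCollector(HTMLParser):
    """Collects the text between tags and ignores the markup itself."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def extract_txt(path: str) -> str:
    with open(path, encoding="utf-8", errors="replace") as f:
        return f.read()


def extract_html(path: str) -> str:
    # Crude: keeps all text nodes (including any script/style contents).
    parser = _TextCollector()
    parser.feed(extract_txt(path))
    parser.close()
    return " ".join(parser.parts)


# Hypothetical registry: one extractor per document type the indexer understands.
EXTRACTORS: dict[str, Callable[[str], str]] = {
    ".txt": extract_txt,
    ".html": extract_html,
    ".htm": extract_html,
}


def extract_text(path: str) -> Optional[str]:
    """Return the searchable text of `path`, or None if no extractor handles its type."""
    ext = os.path.splitext(path)[1].lower()
    extractor = EXTRACTORS.get(ext)
    return extractor(path) if extractor else None
```

Supporting a new format means adding one entry to the registry. Everything downstream only ever sees plain text.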

Google Desktop Search, Windows Desktop Search, and all their competitors do exactly this. Each one’s indexer runs in the background, opens every file on the computer, and builds a database in the same term-to-documents format as the web index described above.
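Here’s a minimal sketch of that indexing pass over a folder. It ignores the background scheduling that real products use and assumes only `.txt` files are read, where a real indexer would hand each file type to its own extractor. `index_directory` and `lookup` are hypothetical names, not any product’s API.

```python
import os
from collections import defaultdict


def index_directory(root: str) -> dict[str, set[str]]:
    """Open every .txt file under `root` and map each term to the files containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Assumption: only plain-text files are read in this sketch.
            if not name.lower().endswith(".txt"):
                continue
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="replace") as f:
                for term in f.read().lower().split():
                    index[term].add(path)
    return dict(index)


def lookup(index: dict[str, set[str]], term: str) -> list[str]:
    # Query time: no files are opened, just one lookup in the prebuilt index.
    return sorted(index.get(term.lower(), set()))
```

The slow part (`index_directory`) runs once, ahead of time. Each search afterwards is only a `lookup`.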

When I search on my computer for a word, all my computer now has to do, just like the web search engines, is look in that index and find the already-generated list of files that match my term.

The key takeaway is that, thanks to these indexes, searching the full text of every file on a computer is now thousands of times faster than searching just the filenames used to be.
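You can get a feel for the difference with a small experiment. The sketch below compares scanning every word of every document against a single dictionary lookup, using randomly generated documents. The helper names are hypothetical, and the timings will vary by machine. Because everything is in memory, the sketch leaves out the disk access that made real file-by-file scans so slow, so it shouldn’t be read as measuring any particular speed-up.

```python
import random
import string
import time
from collections import defaultdict


def make_corpus(n_docs: int, words_per_doc: int, seed: int = 0) -> dict[str, list[str]]:
    """Generate synthetic documents of random 5-letter 'words' (illustrative data only)."""
    rng = random.Random(seed)
    vocab = ["".join(rng.choices(string.ascii_lowercase, k=5)) for _ in range(5000)]
    return {f"doc{i}": rng.choices(vocab, k=words_per_doc) for i in range(n_docs)}


def scan_search(corpus: dict[str, list[str]], term: str) -> set[str]:
    # Old approach: look at every word of every document on every query.
    return {doc for doc, words in corpus.items() if term in words}


def build_index(corpus: dict[str, list[str]]) -> dict[str, set[str]]:
    # New approach, step 1: pay the cost once, up front.
    index: dict[str, set[str]] = defaultdict(set)
    for doc, words in corpus.items():
        for w in words:
            index[w].add(doc)
    return dict(index)


if __name__ == "__main__":
    corpus = make_corpus(n_docs=2000, words_per_doc=200)
    term = corpus["doc0"][0]
    index = build_index(corpus)

    t0 = time.perf_counter()
    slow = scan_search(corpus, term)
    t1 = time.perf_counter()
    fast = index.get(term, set())  # New approach, step 2: one lookup per query.
    t2 = time.perf_counter()

    assert slow == fast  # Both approaches return the same documents.
    print(f"scan:  {t1 - t0:.6f} s")
    print(f"index: {t2 - t1:.6f} s")
```

The index costs something up front, because `build_index` touches every word once. That work is paid once rather than on every query, which is the whole trade desktop search makes.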